Why Containerize ML Models? A Guide to Modernizing Your Workflow!

In this blog, we'll dive into the world of containerization: how it has become a game changer in modern software development, and the specific benefits of containerizing machine learning models.

Introduction to containers:

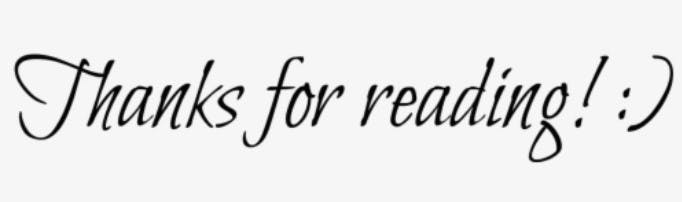

In the early days of computing, companies and organizations bought physical servers to run their applications, and each application typically needed its own server. As user demand grew, the load on each server increased, so companies acquired additional servers to keep up. Because many of these servers sat underutilized, this approach wasted resources and was not cost-effective.

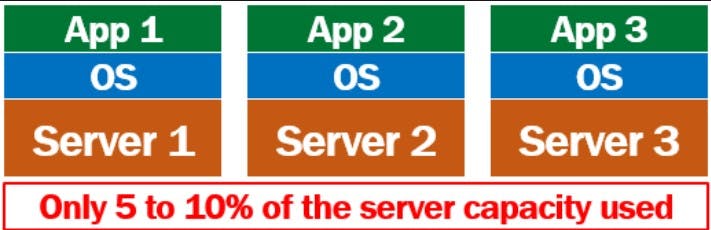

This problem was addressed by "virtual machines" (VMs), a technology that vendors such as VMware popularized on commodity servers. Virtual machines let us run several applications on a single physical server, with each application in its own virtual machine. However, each virtual machine needs its own dedicated allocation of resources, including a full guest operating system, RAM, and CPU.

However, virtual machines still have certain problems, such as:

- A separate operating system is required for every virtual machine

- Code migration issues

- The "works on my machine, why not on other machines?" problem caused by dependency differences

- Module and version mismatches when cloning a repository and setting up the project on a local system (common in open-source development)

These challenges are largely addressed by "containers". With VMs, we have a host operating system with a hypervisor on top of it. The hypervisor creates several virtual machines by dividing up resources such as hard disk and RAM, and the applications run inside these virtual machines, each with its own guest operating system. With containers, we have a host operating system with a container engine on top of it. The engine creates separate containers that share the host's operating system kernel. These containers are isolated environments in which the applications run.

Docker is a tool that helps in containerization

Docker is an open platform for developing, shipping and running applications. It provides the ability to package and run an application in a loosely isolated environment called a container.

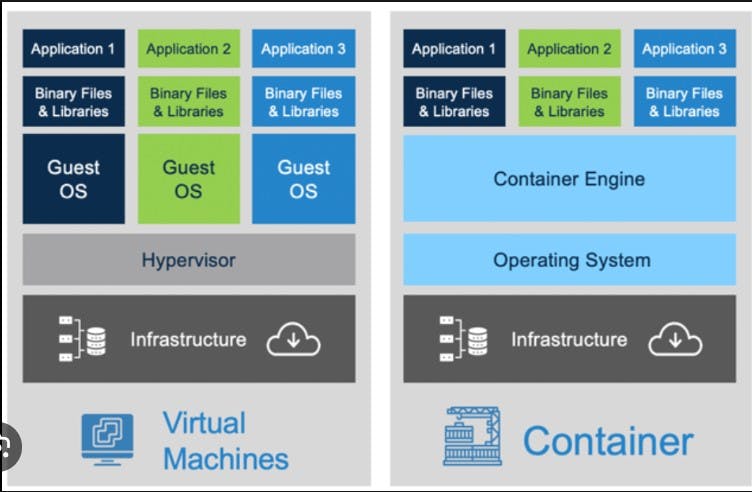

When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects.

A Dockerfile is a text file that contains a set of instructions for building a Docker image. To create your own image, you write a Dockerfile, build an image from it, and then run that image as a container.

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image (base image), with some additional customization.

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI.
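The create, start, stop, and delete steps mentioned above map onto the `docker create`, `docker start`, `docker stop`, and `docker rm` CLI commands. The sketch below only assembles those commands as argument lists and prints them; the image `python:3.8` and the container name `demo-container` are placeholders chosen for illustration, and `run_lifecycle` is a hypothetical helper that executes the commands only when the Docker CLI is found on the PATH.

```python
import shutil
import subprocess
from typing import List


def lifecycle_commands(image: str, name: str) -> List[List[str]]:
    """Return the docker CLI calls for one create/start/stop/remove cycle."""
    return [
        ["docker", "create", "--name", name, image],  # container from an image
        ["docker", "start", name],                    # start the created container
        ["docker", "stop", name],                     # stop it again
        ["docker", "rm", name],                       # delete the stopped container
    ]


def run_lifecycle(image: str, name: str) -> bool:
    """Run the cycle if the docker CLI is installed; return False otherwise."""
    if shutil.which("docker") is None:
        return False
    for command in lifecycle_commands(image, name):
        subprocess.run(command, check=True)
    return True


if __name__ == "__main__":
    # Placeholder image and container name; nothing is executed here,
    # the commands are only printed.
    for command in lifecycle_commands("python:3.8", "demo-container"):
        print(" ".join(command))
```

Keeping the command construction separate from execution makes it possible to inspect the commands on a machine where Docker is not installed.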

Now, Let's Talk about ML Model Containerization!

With a solid understanding of why containerizing applications is a game-changer, let's explore how this concept translates into the realm of machine learning.

Benefits of ML Model Containerization:

Portability

Containerizing ML models makes them highly portable. You can develop a model on your local machine, package it in a container image, and then run it on any system that supports Docker.

Version Control

Containers can encapsulate specific versions of your ML models and their dependencies. This simplifies the process of reverting to previous versions if issues arise.
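One common way to get this behavior is to tag each image with the model version, so that rolling back means running an older tag. The following is a minimal sketch under that assumption; the version numbers are made up, the tags are assumed to be dot-separated integers such as `1.2.0`, and `rollback_target` is a hypothetical helper rather than anything Docker provides.

```python
from typing import List, Optional, Tuple


def parse_version(tag: str) -> Tuple[int, ...]:
    """Turn a tag such as '1.2.0' into (1, 2, 0) so tags sort numerically."""
    return tuple(int(part) for part in tag.split("."))


def image_ref(name: str, tag: str) -> str:
    """Build an image reference in Docker's name:tag form."""
    return f"{name}:{tag}"


def rollback_target(tags: List[str], current: str) -> Optional[str]:
    """Return the newest tag older than `current`, or None if there is none."""
    older = [t for t in tags if parse_version(t) < parse_version(current)]
    return max(older, key=parse_version) if older else None


if __name__ == "__main__":
    # Made-up version history for illustration only.
    available = ["1.0.0", "1.2.0", "1.10.0"]
    previous = rollback_target(available, "1.10.0")
    if previous is not None:
        print("roll back to", image_ref("simple-linear-regression", previous))
```

Comparing versions as integer tuples rather than strings matters here: as plain strings, "1.10.0" would sort before "1.2.0".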

Consistency in Deployment

Ensuring consistency between your development and production environments is crucial for ML model deployment. Because the same image is used in both, containers help ensure that the environment in which your model runs stays the same, reducing issues caused by environmental differences.

Scalability and Load Balancing

When the load on your ML application increases, container orchestration tools like Kubernetes can scale the number of containers up, scale it back down when demand drops, and distribute the workload across them efficiently.
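To make the scaling idea concrete, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below reproduces only that formula plus a clamp to a minimum and maximum replica count; the real autoscaler also applies a tolerance band and stabilization behavior that are left out here, and the numbers in the example are invented.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified version of the autoscaler's replica calculation."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the allowed replica range.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Invented example: 4 replicas at 90% average CPU with a 60% target.
    print(desired_replicas(4, 90, 60))
```

With 4 replicas at 90% utilization and a 60% target, the formula asks for 6 replicas. If utilization later falls to 30%, it asks for 2.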

Reproducibility

Containers make your setup reproducible, which simplifies collaboration. You can share a container image so that others can replicate your environment without setting it up by hand.

Cross-Platform Compatibility

A containerized ML model can run consistently on various operating systems and cloud platforms, as long as a compatible container runtime is available, regardless of most differences in the underlying infrastructure.

Containerizing a Simple Linear Regression Model: A Step-by-Step Guide

If you haven't already installed Docker on your system, you can follow the official Docker documentation to download and install it: Docker installation guide

In this section, we'll walk through the process of containerizing a Simple Linear Regression model using Docker.

Step 1: Set Up Your Project Directory

Create a new directory for your project and navigate to it in your terminal.

mkdir ml_model

cd ml_model

Step 2: Write Your Simple Linear Regression Model

Write the code for your simple linear regression model, either as a Python script such as "simple_linear_regression.py" or as a Jupyter notebook such as "simple_linear_regression.ipynb".

Link to simple_linear_regression model code.
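The linked script is not reproduced here, and given the requirements file it most likely relies on scikit-learn. As a rough, dependency-free stand-in for trying out the container steps, the sketch below fits a line with the closed-form least-squares formulas (slope = covariance of x and y divided by variance of x). The data points are invented, and `fit_line` and `predict` are illustrative names, not taken from the original code.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Evaluate the fitted line at x."""
    return slope * x + intercept


if __name__ == "__main__":
    # Invented data that lies exactly on y = 3x + 1.
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [4.0, 7.0, 10.0, 13.0]
    slope, intercept = fit_line(xs, ys)
    print(f"slope={slope:.2f} intercept={intercept:.2f}")
    print(f"prediction for x=5: {predict(slope, intercept, 5.0):.2f}")
```

Saved as "simple_linear_regression.py", a script of this shape prints its result to standard output, which is what appears in the terminal when the container runs.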

Step 3: Create a Requirements File, such as "requirements.txt", in your project directory

scikit-learn==1.0

numpy==1.19.5

pandas==1.3.4

matplotlib==3.4.3

seaborn==0.11.2

scipy==1.7.1
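Pinning exact versions only helps if the running environment actually matches the pins. As a small sketch of how that could be checked, the code below parses `name==version` lines and compares them with what `importlib.metadata` (available from Python 3.8) reports as installed. The helper names are hypothetical, and only simple `==` pins are handled, not the full requirements syntax.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional, Tuple


def parse_pins(lines: Iterable[str]) -> Dict[str, str]:
    """Extract {package: version} from simple 'name==version' lines."""
    pins = {}
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


def installed_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Look up installed versions; None means the package is not installed."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


def find_mismatches(
    pins: Dict[str, str], installed: Dict[str, Optional[str]]
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {package: (pinned, installed)} for every pin that is not met."""
    return {
        name: (wanted, installed.get(name))
        for name, wanted in pins.items()
        if installed.get(name) != wanted
    }


if __name__ == "__main__":
    pins = parse_pins(["scikit-learn==1.0", "numpy==1.19.5"])
    for name, (wanted, got) in find_mismatches(pins, installed_versions(pins)).items():
        print(f"{name}: pinned {wanted}, installed {got}")
```

Run inside the container, a check like this should print nothing when the image was built from the same requirements file.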

Step 4: Write a Dockerfile: Name the file as "Dockerfile" without any extensions

- Running a normal Python script:

If you have converted your notebook file (".ipynb") to a ".py" file, use the instructions below in your Dockerfile.

FROM python:3.8

WORKDIR /app

COPY requirements.txt /app/

RUN pip install --no-cache-dir -r requirements.txt

COPY . /app

CMD ["python", "simple_linear_regression.py"]

- Running a Jupyter Notebook-based Python script:

To enable visualizations such as scatter plots and graphs when running a Jupyter Notebook-based Python script inside a Docker container, you can make use of Jupyter's web interface by running a Jupyter Notebook server inside the container.

Create a "Dockerfile" that includes Jupyter Notebook support.

Here's an example of a modified Dockerfile:

FROM python:3.8

WORKDIR /app

COPY . /app

RUN pip install --no-cache-dir -r requirements.txt

RUN pip install jupyter

EXPOSE 8888

CMD ["jupyter", "notebook", "--ip=0.0.0.0", "--port=8888", "--no-browser", "--allow-root"]

Step 5: Build and Run Your Docker Container

For Python script:

docker build -t simple-linear-regression .

docker run simple-linear-regression

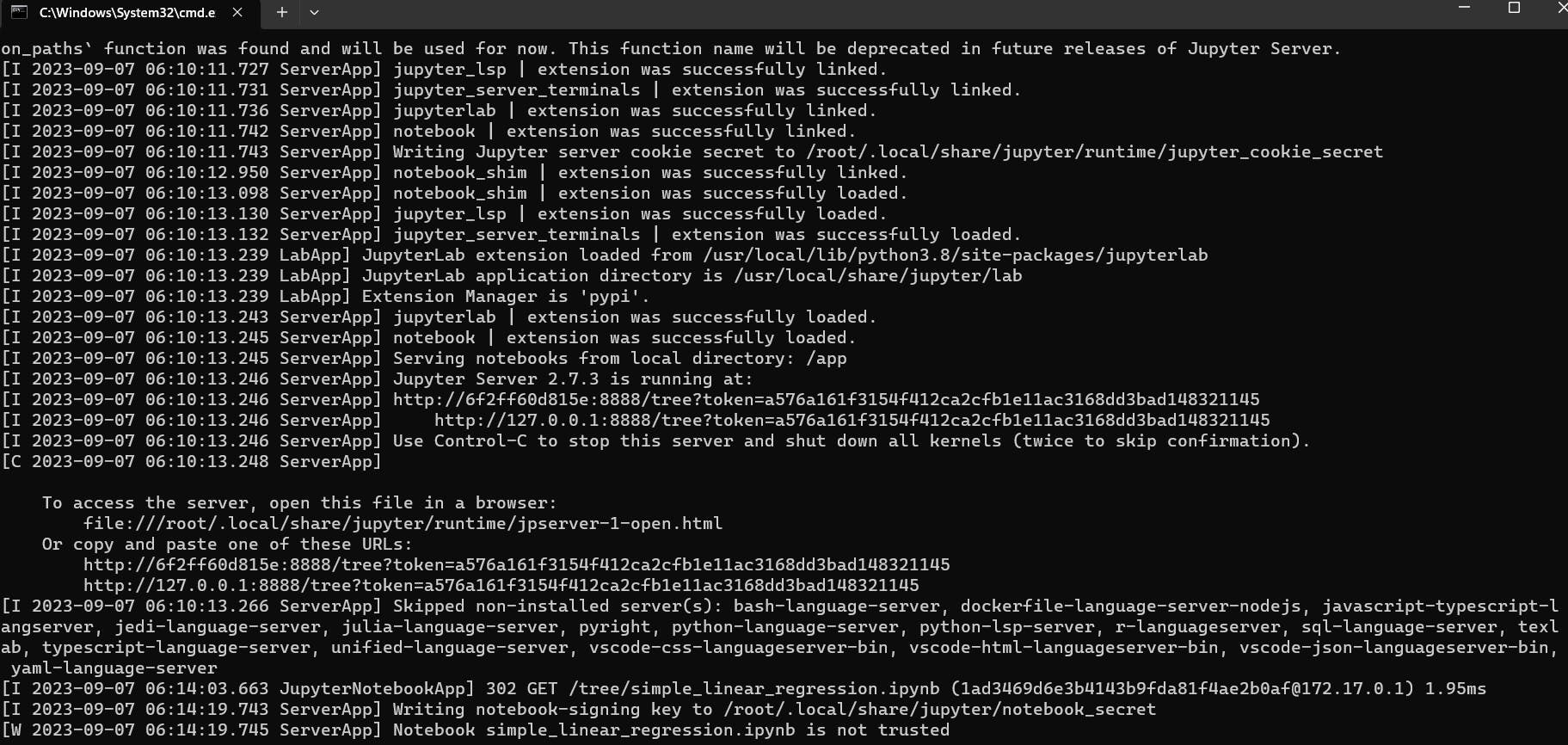

For Jupyter Notebook-based Python script:

docker build -t simple-linear-regression .

docker run -p 8888:8888 simple-linear-regression
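The `-p 8888:8888` option publishes the container's port 8888 on the host. If the Jupyter page does not load, one quick thing to rule out is whether anything is listening on that port at all. The snippet below is a minimal sketch of such a check using a plain TCP connection; `port_is_open` is an illustrative helper, and it assumes the container runs on the same machine (`127.0.0.1`).

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    print("Jupyter port reachable:", port_is_open("127.0.0.1", 8888))
```

A successful connection only shows that something accepts connections on the port; it does not confirm that the notebook server itself is healthy.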

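You should see the output of your simple linear regression model, including the predicted value: in the terminal for the Python script, and in the Jupyter server for the notebook. For the notebook container, open the URL (including its access token) that Jupyter prints in the container logs, then open and run the notebook.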

Output for the Jupyter Notebook-based container:

Conclusion:

I hope this post has clarified the benefits of containerizing ML models, from enabling seamless deployment and scalability to enhancing collaboration and ensuring reproducibility. Containerization is an important step toward building efficient and versatile machine learning workflows. It not only streamlines the deployment process but also aligns with modern DevOps practices.